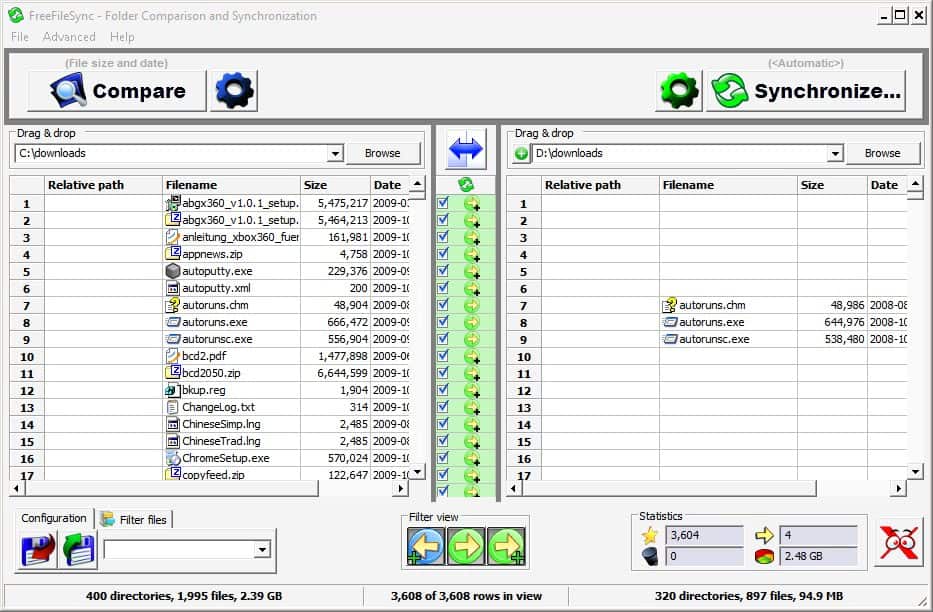

This forum entry is to pass along some interesting results I've achieved in speeding up FreeFileSync's performance when backing up files across a slow connection. This has been the result of a long discussion with Zenju regarding Feature Request ID: 3482558 (Optimized Order of Sync Actions).

Also see feature request 3444554, which is about the same issue.

These tests and the resulting strategy allow the user to achieve nearly the same results as the feature requests, without losing the disk-usage optimizations that FFS already performs.

Use Case: The user is a mobile or remote worker who needs to periodically back up files from their local disk to one or more network drives across a VPN or other subjectively "slow" connection. The user wants to get as many of the smallest files done first, in case the connection gets interrupted or the FFS job has to be aborted for some reason. There are 6 folder pairs to be updated, left to right. There are no overlaps between any of the directory trees, regardless of whether they are sources or destinations.

FFS has been set to ignore symbolic links, ignore errors, and permanently delete files.

I've selected 200mb as my arbitrary boundary between "small" files and "large" files. (This size is based on a brief statistical analysis of my file sizes and connection speed. YMMV, so you might need to select a different boundary.) I've allowed a 1 kb overlap to avoid accidentally excluding any files.

Control Setup: A single job file with the 6 folder pairs listed, with no size filters in place (other than an upper limit of 64mb, an artifact of my testing environment: on a VPN, all my files larger than 64mb need to wait until I'm in my office with a gigabit ethernet connection).

Test Setup 1: A single job file with 12 pairs listed. The first 6 are the left-right pairs with the "small file" size filter. The last 6 are the same pairings but with the "large file" size filter.

Test Setup 2: 12 job files; each file contains one of the folder pairs just described. A batch file was used to start each of the jobs in the same sequence as each pair appeared in Test Setup 1, with a 2 second delay between each job start, to ensure the "large file" jobs are temporarily locked by their corresponding "small file" jobs until the small files are done.

Test Setup 3: 6 job files; each file contains two folder pair listings. The first listing is the left-right pair with the "small file" size filter. The second listing is the same pair with the "large file" size filter. A batch file was used to start each of these jobs with a 2 second delay between each. The delay is only to ensure a proper comparison of performance against Test Setup 2.

Results: Setups #2 and #3 completed sooner than the control setup. The fastest took less than half the time of the control. Another took roughly the same time as the control, but at least has the desired "small files first" behavior.

I would need to re-run these to obtain objective time measurements. If anyone would like to do those separately, please post your results.
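
To make the "small"/"large" split concrete, here is a minimal Python sketch of how two overlapping size filters partition a set of files. It assumes the "small file" filter keeps sizes up to the boundary and the "large file" filter keeps sizes from the boundary minus the 1 kb overlap upward, with inclusive bounds, and it treats "mb"/"kb" as 1024-based. The exact filter values are not preserved in the post, so `size_filters`, `partition`, and `uncovered` are hypothetical helpers for illustration, not FreeFileSync functions.

```python
from __future__ import annotations

KIB = 1024
MIB = 1024 * KIB


def size_filters(boundary: int, overlap: int) -> tuple[int, int]:
    """Return (small_max, large_min) in bytes.

    Hypothetical reading of the setup: the small-file filter keeps files up
    to `boundary`, the large-file filter keeps files from
    `boundary - overlap` upward, so the two ranges share `overlap` bytes.
    """
    return boundary, boundary - overlap


def partition(sizes: list[int], small_max: int, large_min: int) -> tuple[list[int], list[int]]:
    """Return the sizes each filter would select (both bounds inclusive)."""
    small = [s for s in sizes if s <= small_max]
    large = [s for s in sizes if s >= large_min]
    return small, large


def uncovered(sizes: list[int], small_max: int, large_min: int) -> list[int]:
    """Sizes selected by neither filter.

    For integer sizes this is empty whenever large_min <= small_max + 1,
    which the overlap guarantees.
    """
    return [s for s in sizes if small_max < s < large_min]


if __name__ == "__main__":
    small_max, large_min = size_filters(200 * MIB, 1 * KIB)
    sizes = [10 * KIB, 200 * MIB - 512, 200 * MIB, 200 * MIB + 1, 300 * MIB]
    small, large = partition(sizes, small_max, large_min)
    print("small job:", small)
    print("large job:", large)
    print("missed by both:", uncovered(sizes, small_max, large_min))
```

Files that land in the overlap band are selected by both jobs; presumably whichever job runs second simply finds them already in sync, which is why a small overlap is a cheap way to make sure nothing falls between the two filters.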

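The batch files in Test Setups 2 and 3 start jobs one after another without waiting for each to finish, pausing 2 seconds between starts. The following is a rough Python equivalent, a sketch under stated assumptions rather than the author's batch file: the job file names (`pair1_small.ffs_batch` and so on) are hypothetical, and launching a job as `FreeFileSync.exe <job file>` is an assumption you should check against your own installation. `popen` and `sleep` are injectable only so the sketch can be tested without starting real processes.

```python
from __future__ import annotations

import subprocess
import time
from typing import Callable, Sequence


def launch_staggered(
    commands: Sequence[Sequence[str]],
    delay_s: float = 2.0,
    popen: Callable = subprocess.Popen,
    sleep: Callable[[float], None] = time.sleep,
) -> list:
    """Start each command in order without waiting for it to finish,
    sleeping `delay_s` seconds between consecutive starts."""
    procs = []
    for i, cmd in enumerate(commands):
        if i > 0:
            sleep(delay_s)  # stagger starts so earlier jobs get going first
        procs.append(popen(list(cmd)))
    return procs


def setup2_commands(ffs_exe: str, pairs: Sequence[str]) -> list[list[str]]:
    """Hypothetical Test Setup 2 order: every small-file job first, then the
    large-file jobs, keeping the same pair order as Test Setup 1."""
    small = [[ffs_exe, f"{p}_small.ffs_batch"] for p in pairs]
    large = [[ffs_exe, f"{p}_large.ffs_batch"] for p in pairs]
    return small + large


if __name__ == "__main__":
    # Dry run: print the planned launch order instead of starting processes.
    # Pass the list to launch_staggered() to actually start the jobs.
    cmds = setup2_commands("FreeFileSync.exe", [f"pair{n}" for n in range(1, 7)])
    for cmd in cmds:
        print(" ".join(cmd))
```

The stagger matters mainly for ordering: each "large file" job starts after its "small file" counterpart for the same folder pair, which is what lets the small-file job hold the pair until it is done.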